# Build a web scraper in Node.js: how to

To begin with, create a folder called `Web-Scraper` and open it in VS Code or any other IDE you like. Then open a terminal (or CMD on Windows) in that folder and type in this command:

```shell
npm init
```

After you execute the command, it will ask you a list of questions, and your answers are saved in a `package.json` file. For this project I'm using express, request, cheerio and fs.

To parse the HTML I use cheerio. It provides a jQuery-like interface to interact with a piece of HTML you already have:

```javascript
const cheerio = require("cheerio")

// Parse the HTML
let $ = cheerio.load('<h2 class="title">Hello world</h2>')

// Take the h2.title element and show the text
console.log($("h2.title").text()) // => Hello world
```

Because I like to modularize all the things, I created cheerio-req, which is basically tinyreq (for fetching the page) combined with cheerio (for parsing it):

```javascript
const cheerioReq = require("cheerio-req")
```

Since we already know how to parse the HTML, the next step is to build a nice public interface we can export into a module.

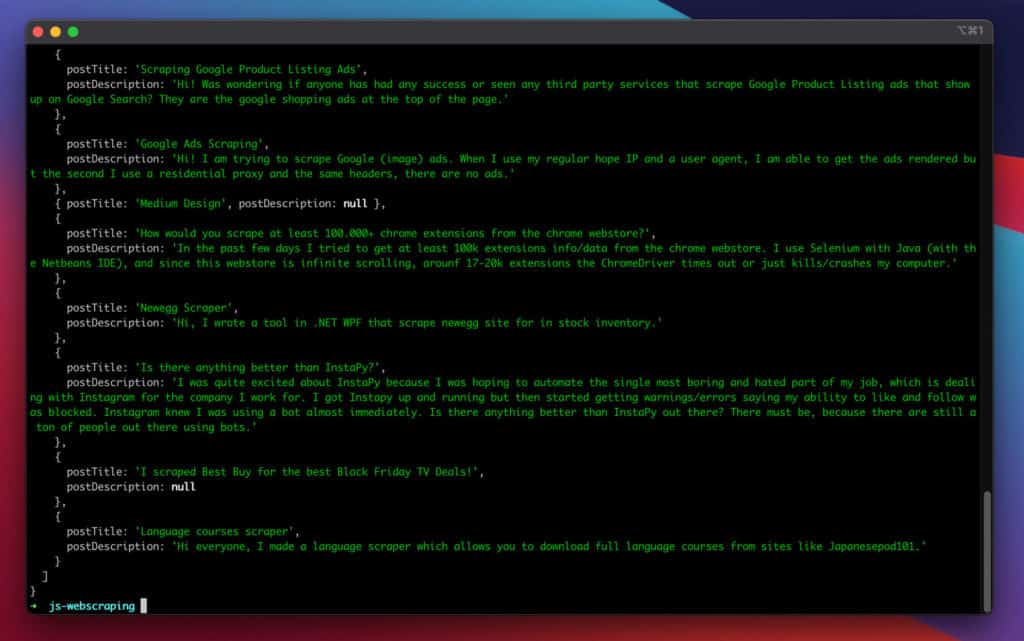

Tinyreq is a friendlier wrapper around the native `http.request` built-in solution. It's designed to be really simple to use and is still quite minimalist. Using this module, you can easily get the HTML rendered by the server from a web page:

```javascript
const request = require("tinyreq")

// Placeholder URL: replace it with the page you want to scrape
request("https://example.com/", (err, body) => {
    console.log(err || body) // Print out the HTML
})
```

Once we have a piece of HTML, we need to parse it and extract the data we want. In Node.js, all three steps (loading the page, parsing the HTML and extracting the data) are quite easy because the functionality is already available in different modules, written by different developers. Because I often scrape random websites, I created yet another scraper: scrape-it, a Node.js scraper for humans.

I'm trying to make a simple Node.js web scraper, and I can't figure out how to format my result as a JSON file.
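For the JSON question above, Node's built-in `fs` module together with `JSON.stringify` is usually enough. This is a minimal sketch assuming the scraped data is already a plain JavaScript object or array; the helper name `saveAsJson` and the output path are hypothetical.

```javascript
const fs = require("fs")
const path = require("path")

// Hypothetical helper: write scraped data to a pretty-printed JSON file.
// JSON.stringify(value, null, 2) indents nested structures with two spaces.
function saveAsJson(filePath, data) {
  const json = JSON.stringify(data, null, 2)
  // Create the parent directory if it does not exist yet
  fs.mkdirSync(path.dirname(filePath), { recursive: true })
  // Trailing newline keeps the file friendly to editors and diffs
  fs.writeFileSync(filePath, json + "\n", "utf8")
  return json
}

// Usage (the output path is only an example):
//   saveAsJson("output/result.json", [{ title: "Hello world" }])
```

Collecting all results into one array and writing it once at the end is simpler than appending to the file per page, because a JSON file must be a single valid document.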

As always, I recommend choosing simple, small modules. For making HTTP(s) requests, I wrote a tiny package that does just that: tinyreq.
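Since tinyreq wraps Node's built-in `http.request`, it can help to see roughly what such a wrapper does. The sketch below is not tinyreq's actual source: it uses only the built-in `http` and `https` modules and mimics the `(err, body)` callback shape from the tinyreq example. The name `getHtml` is hypothetical, and the sketch does not follow redirects or set timeouts.

```javascript
const http = require("http")
const https = require("https")

// Hypothetical minimal GET helper in the spirit of tinyreq (not tinyreq's real code).
// Calls cb(err) on network errors or a non-2xx status, otherwise cb(null, body).
function getHtml(url, cb) {
  let done = false
  // Guard so the callback runs at most once, even if several errors fire
  const finish = (err, body) => {
    if (done) return
    done = true
    cb(err, body)
  }

  // Pick the built-in client based on the URL scheme
  const client = url.startsWith("https:") ? https : http
  const req = client.get(url, (res) => {
    let body = ""
    res.setEncoding("utf8") // decode chunks as UTF-8 text
    res.on("data", (chunk) => { body += chunk })
    res.on("error", finish)
    res.on("end", () => {
      if (res.statusCode < 200 || res.statusCode >= 300) {
        return finish(new Error(`Request failed with status ${res.statusCode}`))
      }
      finish(null, body)
    })
  })
  req.on("error", finish)
}

// Usage (placeholder URL):
//   getHtml("https://example.com/", (err, body) => console.log(err || body))
```

Treating non-2xx responses as errors is a design choice: an error page would otherwise be parsed as if it were the content you wanted.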

# Build a web scraper in Node.js: install

## Step 1: Setting Up the Web Scraper

With Node.js installed, you can begin setting up your web scraper. First, you will create a project root directory and then install the required dependencies.

To load the web page, we need to use a library that makes HTTP(s) requests. There are a lot of modules that do that.
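If you are on Node.js 18 or newer, a global `fetch` is built in, so for simple pages you may not need an extra HTTP module at all. A minimal sketch, assuming Node 18+; `fetchHtml` is a hypothetical name:

```javascript
// Hypothetical helper: load a page's HTML with the global fetch (Node 18+).
async function fetchHtml(url) {
  const res = await fetch(url)
  if (!res.ok) {
    // res.ok is true only for 2xx status codes
    throw new Error(`Request failed with status ${res.status}`)
  }
  // res.text() decodes the response body as UTF-8 text
  return res.text()
}

// Usage (placeholder URL):
//   fetchHtml("https://example.com/").then(console.log, console.error)
```

The returned string can be passed straight to `cheerio.load()`. A dedicated module still makes sense if you need to support older Node versions.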

Sometimes we need to collect information from different web pages automagically.
